Artificial intelligence is no longer a thing of the future. It has become part of the average worker's daily routine: 78% of workers now use AI tools in their jobs [1], and nearly two-thirds use them daily to take notes, conduct research and much more. Adoption is also rising across industries, yet confidence in AI lags behind. Employees are quick to ask: “Should I use AI for this problem?”, “Can AI solve this?”, “Can AI systems be trusted?” and so on.

How does one build AI confidence into an organization so that workers stop questioning how far AI can be integrated into their workflows? In this article, we discuss what AI confidence is, the threat of AI sprawl and how to navigate it, and how you, as a leader, can successfully build AI confidence into your organization.

What is AI confidence

AI confidence refers to employees’ belief in, and comfort with, using AI at work. It rests on trust in AI systems, the capability to use them and an understanding of their impact in the workplace. Why is AI confidence a core component of successful AI adoption? When workers are confident in an AI system’s abilities, they trust its outputs and see AI as a partner rather than a threat. This fosters adoption and opens new ways to innovate.

Ideally, workers would be highly confident applying AI in the workplace. In reality, however, a study found that AI confidence remains uneven: while 69% feel very or extremely confident using AI tools, 23% harbor concerns about safe usage and 8% lack confidence entirely. Moreover, a PwC study found that 67% of CEOs think AI and automation (including blockchain) will have a negative impact on stakeholder trust in their industry over the next five years [2]. This lingering doubt has yet to be tackled, and AI sprawl only compounds it.

Threat of AI sprawl

What is AI sprawl and what does ignoring it cost

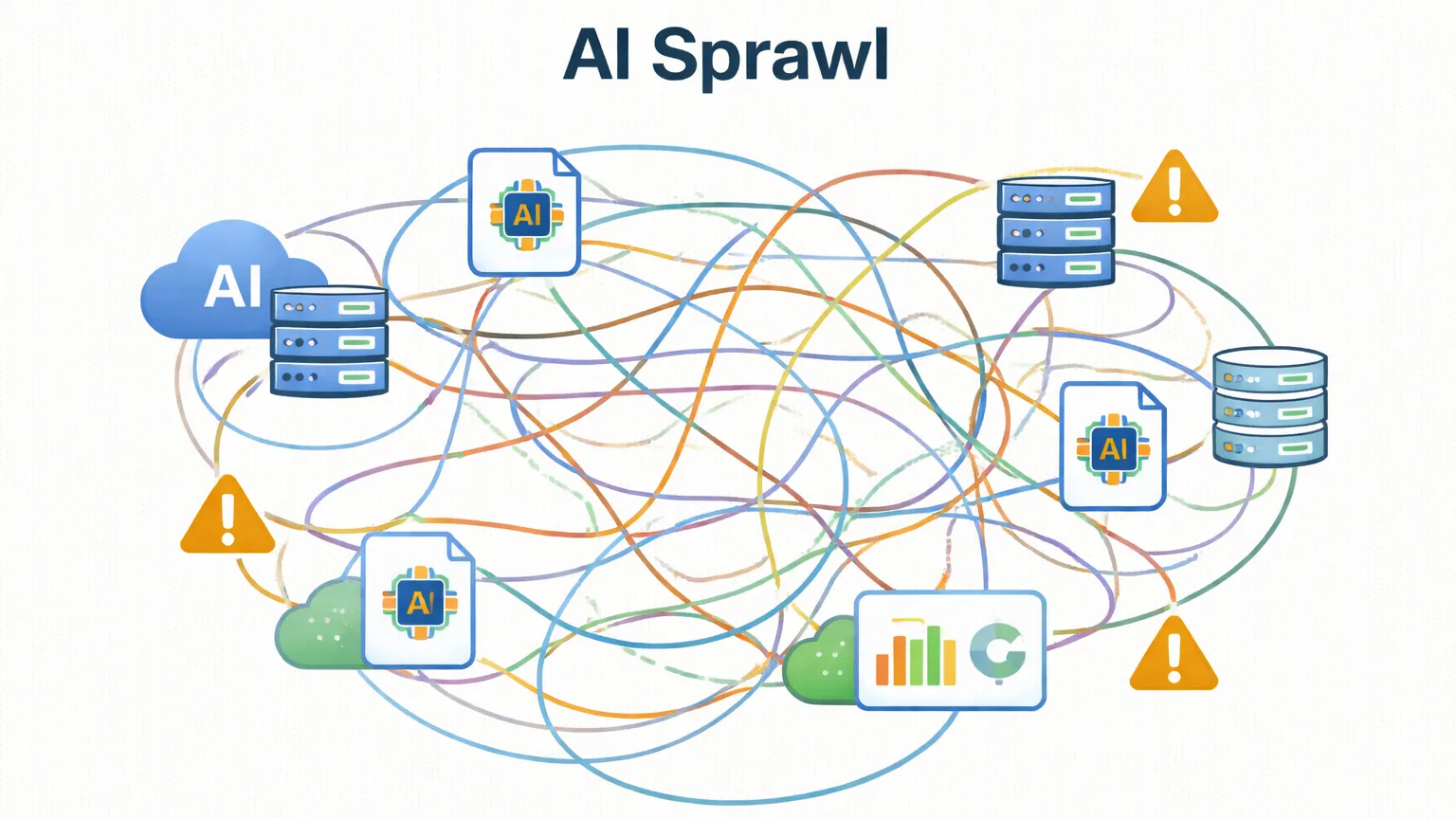

AI sprawl occurs when multiple AI tools, models and platforms are used across an organization without centralized visibility or governance. Business functions adopt AI solutions independently, which often results in duplicated effort and inconsistent data. When AI adoption is not planned around your organization’s needs, it introduces hidden costs: wasted resources, redundant tools, security risks and fractured insights across teams. Ignoring AI sprawl not only erodes confidence in AI but also weighs down innovation and creates operational friction.

So what is an adequate solution for AI sprawl? Traditional governance alone is not enough to control it. Enterprises must adopt a governance model that moves at the same speed as their AI, so that issues are flagged and the relevant rules applied the moment they arise, rather than surfacing later once a model is already live.

AI sprawl vs AI confidence comparison

Here is a quick breakdown of AI sprawl versus AI confidence and how each affects the organization:

| Aspect | AI Sprawl | AI Confidence |
| --- | --- | --- |
| Definition | Disconnected, uncontrolled use of AI tools and models across teams; fragmented infrastructure | Coordinated, governed, and observable AI usage across the enterprise; predictable and trusted outcomes |
| Scope of Use | Ad-hoc experiments, departmental pilots, shadow IT | Enterprise-wide, aligned to business objectives, centralized orchestration |
| Data Management | Silos, inconsistent formats, unvalidated inputs | Structured, clean, governed, and validated data pipelines |
| Model Governance | No standard evaluation, versioning, or audit; multiple overlapping models | Centralized model registry, approval workflows, version control, and monitoring |
| Performance & Monitoring | Limited visibility, inconsistent outputs, unknown drift | Continuous monitoring, performance tracking, and automated alerts |
| Compliance & Risk | High exposure: regulatory, security, and reputational risks | Built-in compliance, logging, and risk mitigation; reduces operational and legal risk |
| Cost & Resource Efficiency | Redundant tools, duplicated efforts, uncontrolled cloud/compute spend | Optimized model usage, reduced duplication, cost-aware routing |
| Decision-Making | Unreliable insights, inconsistent results, low trust from executives | Trusted insights, predictable results, high executive confidence |
| Outcome for Enterprise | Wasted budgets, stalled AI adoption, lack of scalability | Measurable ROI, scalable AI adoption, repeatable business value |
| Organizational Impact | Friction, skepticism, risk aversion, slow innovation | Alignment across business and technical teams, empowered decision-making, faster innovation |

Why enterprises should care about AI confidence

Over the past three years, AI has been a hot topic among stakeholders, who are eager to deploy AI models within their own organizations. However, AI models deployed without proper governance, oversight and accountability still carry risk and can fail. This is why it is necessary to focus on Responsible AI: a framework for developing and using artificial intelligence ethically. It centers on core principles such as fairness and inclusivity, transparency, accountability, privacy and reliability. With these principles at the core of every AI initiative, enterprises are better positioned to innovate safely and securely.

How enterprises can build AI confidence

So how do enterprises build AI confidence? AI should be managed like any other technology your enterprise uses. PwC outlines the following five foundational principles that should be in place before you focus on launching any AI project:

- Ensure clarity over AI strategy

Enterprises intending to use AI should be clear about the direction they want to take with it, and should answer tough questions such as “What should we be careful of when using AI?”, “Is it safe to trust AI?” and “What are the risks of AI?” before committing to any AI project.

- Transparency by design

When integrating AI into enterprise systems, it is necessary to build awareness and transparency with your stakeholders and end users, such as your customers, and to explain why they should trust your decision to bring AI into your organization. There should also be checks and balances on AI so that it remains under control.

- Build your AI organization in advance

It’s important to ensure cross-organizational communication, collaboration and centralized coordination of AI initiatives.

- Build data management into AI

Establish new processes that keep data clean, controlled and secure, preventing data leakage.

- Integrate assurance into your AI model

Assurance in AI is not limited to upgrading the AI model; it requires business-wide evaluation to gauge outcomes and risks and to identify opportunities.

Build AI confidence with Beam Data

The path to AI confidence starts with structure, visibility and governance, which is exactly what Beam Data provides. Whether your enterprise needs a centralized AI Hub to orchestrate multiple models, enforce governance, and monitor performance, or a customized AI solution tailored to your unique workflows and business objectives, Beam helps organizations move from fragmented experimentation to confident, business-led AI adoption. By aligning AI initiatives with strategic goals, consolidating tools, and providing clear oversight, Beam enables enterprises to reduce costs, increase trust, and scale AI safely across the organization.

FAQ: AI Confidence

1. What is AI Confidence?

AI Confidence is the ability to trust AI systems to deliver reliable, governed, and business-aligned outcomes at scale.

2. What causes organizations to lose AI Confidence?

AI sprawl, siloed data, lack of governance, and poor visibility into AI usage and performance.

3. How can enterprises build AI Confidence quickly?

By centralizing AI through Beam Data’s AI Hub or a customized solution, aligning use cases with business goals, and enforcing governance.

Sources & References

- [1] Jon Swartz, “AI in the Workplace: The Confidence Gap Behind Widespread Adoption,” Techstrong.ai, October 12, 2025, https://techstrong.ai/agentic-ai/ai-in-the-workplace-the-confidence-gap-behind-widespread-adoption/

- [2] PwC Switzerland, “Responsible Artificial Intelligence: How to Build Trust and Confidence in AI,” 2017, https://www.pwc.ch/en/publications/2017/pwc_responsible_artificial_intelligence_2017_en.pdf